Leadership in the AI transformation: A workshop report for specialists and managers

Published on: May 10, 2025 / Updated on: May 10, 2025 - Author: Konrad Wolfenstein

Leadership in the AI transformation: A workshop report for specialists and managers - Image: Xpert.digital

What managers need to know about AI now: seize opportunities, manage risks, lead with confidence (reading time: 32 min / no advertising / no paywall)

Master the AI revolution: an introduction for managers

The transformative power of AI: redesigning work and value creation

Artificial intelligence (AI) is regarded as a technology that, like no other, opens up new possibilities for fundamentally rethinking work and value creation. For companies, integrating AI is a crucial step toward remaining successful and competitive in the long term, because it promotes innovation, increases efficiency and improves quality. The economic and social effects of AI are considerable: it is one of the most important digital topics of the future, is developing rapidly and holds enormous potential. Companies increasingly recognize the benefits of automation and the efficiency gains that AI brings. This is not merely a technological change but a fundamental shift in business models, process optimization and customer interaction, and adapting to it has become a matter of survival in a competitive market.

The much-cited "transformative power" of AI goes beyond simply introducing new tools; it implies a paradigm shift in strategic thinking. Managers are called upon to re-evaluate core processes, value propositions and even industry structures. Those who view AI only as an efficiency tool risk overlooking its deeper strategic potential. The rapid development of AI meets an existing shortage of skilled workers. This creates a twofold challenge: on the one hand, there is an urgent need for rapid upskilling so that AI can be used effectively. On the other hand, AI offers the opportunity to automate tasks and thus potentially ease the skills shortage in some areas, while new qualification requirements arise at the same time. This calls for nuanced workforce planning on the part of managers.

Weighing opportunities and risks in the AI age

Although AI systems offer highly effective opportunities, they are inextricably linked to risks that must be managed. The discourse around AI involves weighing its significant potential against its inherent risks, which requires a balanced approach that exploits the advantages and minimizes the disadvantages. Companies face the challenge of promoting innovation while complying with data protection and ethics guidelines, which makes striking a balance between progress and compliance decisive.

This balancing act is not a one-off decision but an ongoing strategic necessity. As AI technologies evolve, for example from specialized AI toward more general capabilities, the nature of the opportunities and risks will change as well. This requires continuous re-evaluation and adaptation of governance and strategy. Perceptions of the risks and benefits of AI can vary significantly within an organization. For example, active AI users tend to be more optimistic than those who have not yet adopted AI. This illustrates a critical change-management challenge for managers: the perception gap must be closed through education, clear communication and the demonstration of tangible benefits, while concerns are addressed at the same time.

Understand the AI landscape: core concepts and technologies

Generative AI (GenAI) and the path to artificial general intelligence (AGI)

Generative AI (GenAI)

Generative AI (GenAI) refers to AI models designed to create new content in the form of text, audio, images or video, and it offers a wide range of applications. GenAI helps users create unique, meaningful content and can act as an intelligent question-answering system or personal assistant. GenAI is already revolutionizing content creation, marketing and customer retention by enabling the rapid production of personalized materials and the automation of responses.

Because it is immediately accessible and widely applicable, GenAI is often the "entry-level AI" for many organizations. This first contact shapes perceptions and can either drive or hinder broader AI adoption. Managers should therefore steer these first experiences carefully in order to create positive momentum.

Artificial general intelligence (AGI)

Artificial general intelligence (AGI) refers to the hypothetical intelligence of a machine that can understand or learn any intellectual task a human can perform, thus emulating human cognitive abilities. It describes AI systems that can carry out a wide range of tasks instead of being specialized in specific ones.

True AGI does not currently exist; it remains a concept and a research goal. OpenAI, a leading company in this field, defines AGI as "highly autonomous systems that outperform humans at most economically valuable work". By 2023, only the first of five ascending AGI levels, referred to as "Emerging AI", had been reached.

The ambiguity and the differing definitions of AGI suggest that managers should treat AGI as a long-term, potentially transformative horizon rather than an immediate operational concern. The focus should be on using today's "powerful AI" while strategically monitoring progress toward AGI. Overinvesting in speculative AGI scenarios could divert resources from more immediate AI opportunities. The progression from specialized AI through GenAI to ongoing AGI research implies increasing autonomy and capability of AI systems. This trend correlates directly with a growing need for robust ethical frameworks and governance, since more powerful AI carries greater potential for misuse or unintended consequences.

AI assistant vs. AI agent: defining roles and capabilities

AI assistants support people with individual tasks: they respond to requests, answer questions and make suggestions. They are typically reactive and wait for human commands. Early assistants were rule-based, but modern ones rely on machine learning (ML) or foundation models. AI agents, by contrast, are more autonomous and able to pursue goals and make decisions independently with minimal human intervention. They are proactive, can interact with their environment and adapt to it through learning.

The main differences lie in autonomy, task complexity, user interaction and decision-making ability. Assistants provide information for human decisions, while agents can make and carry out decisions. In terms of applications, assistants improve the customer experience and support HR tasks and banking inquiries. Agents, on the other hand, can adapt to user behavior in real time, proactively prevent fraud and automate complex HR processes such as talent acquisition.

The transition from AI assistants to AI agents signals a shift from AI as a "tool" to AI as a "collaborator" or even an "autonomous employee". This has profound effects on work design, team structures and the skills required of human employees, who increasingly have to manage these systems and work alongside them. As AI agents become more common and capable of making independent decisions, the "accountability gap" becomes a more pressing problem: if an AI agent makes an incorrect decision, assigning responsibility becomes complex. This underlines the critical need for robust AI governance that addresses the unique challenges of autonomous systems.

Below is a comparison of the most important distinguishing features:

Comparison of AI assistants and AI agents

This table gives managers a clear understanding of the fundamental differences, helping them select the right technology for specific needs and anticipate the different levels of oversight and integration complexity involved.

| Feature | AI assistant | AI agent |
| --- | --- | --- |
| Basic behavior | Reactive, waits for human commands | Proactive and autonomous, acts independently |
| Primary function | Executes tasks on request | Pursues goals |
| Decision-making | Supports human decision-making | Makes and implements decisions independently |
| Learning | Mostly limited and version-based | Adaptive, learns continuously |
| Typical applications | Chatbots, information retrieval | Process automation, fraud detection, solving complex problems |
| Human interaction | Requires constant input | Requires only minimal human intervention |
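The behavioral difference in the first rows of the comparison can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not how any particular product works: `assistant`, `agent`, the inventory goal and the five-unit order limit are all hypothetical. The assistant answers exactly one request, while the agent runs its own observe-decide-act loop toward a goal, bounded by a step budget as a simple oversight guardrail.

```python
from typing import Callable


def assistant(request: str, answer: Callable[[str], str]) -> str:
    """Reactive: one human request in, one response out. Nothing happens without a request."""
    return answer(request)


def agent(target_stock: int, stock: int, max_steps: int = 10) -> list[str]:
    """Proactive: pursues a goal in a loop (observe, decide, act) without per-step human input.

    max_steps is a hypothetical guardrail that caps how long the agent may act on its own.
    """
    log = []
    for step in range(max_steps):
        if stock >= target_stock:                 # observe: is the goal reached?
            log.append(f"step {step}: goal reached at {stock}")
            break
        order = min(5, target_stock - stock)      # decide: order at most 5 units per step
        stock += order                            # act: change the (simulated) environment
        log.append(f"step {step}: ordered {order}, stock now {stock}")
    return log


if __name__ == "__main__":
    print(assistant("What is our current stock?", lambda q: "Current stock: 0 units"))
    for line in agent(target_stock=12, stock=0):
        print(line)
```

The step budget is one simple way to address the accountability concern raised above: the agent acts on its own, but only within a limit a human has set in advance.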

The engine room: machine learning, large language models (LLMs) and foundation models

Machine learning (ML)

Machine learning is a subfield of AI in which computers learn from data and improve with experience without being explicitly programmed. Algorithms are trained to find patterns in large datasets and to make decisions and predictions based on them. The main types of ML are supervised learning (learning from labeled data), unsupervised learning (finding patterns in unlabeled data), semi-supervised learning (a mixture of labeled and unlabeled data) and reinforcement learning (learning through trial and error with rewards). ML increases efficiency, minimizes errors and supports decision-making in companies.

Understanding the different types of machine learning matters to managers not only from a technical point of view but also for understanding data requirements. Supervised learning, for example, requires large quantities of high-quality labeled data, which affects data strategy and investment. Although identifying the business problem should come first, whether a given type of ML is applicable will depend heavily on the availability and nature of the data.
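To make the distinction between supervised and unsupervised learning concrete, here is a minimal pure-Python sketch on one-dimensional toy data. The supervised part assumes labeled sensor readings ("ok"/"fault") and learns one average value per label (a nearest-centroid classifier); the unsupervised part receives the same readings without labels and splits them into two groups with a bare-bones k-means (k = 2). Function names and data are illustrative assumptions; production systems would typically rely on an established ML library.

```python
from statistics import mean


def train_nearest_centroid(values: list[float], labels: list[str]) -> dict[str, float]:
    """Supervised: learn one centroid (average value) per label from labeled data."""
    return {
        label: mean(v for v, l in zip(values, labels) if l == label)
        for label in set(labels)
    }


def predict(centroids: dict[str, float], value: float) -> str:
    """Assign the label whose centroid is closest to the new value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))


def two_means(values: list[float], iterations: int = 10) -> tuple[list[float], list[float]]:
    """Unsupervised: split unlabeled 1-D values into two groups (k-means with k = 2)."""
    low, high = min(values), max(values)   # start the two centroids at the extremes
    if low == high:
        return sorted(values), []
    for _ in range(iterations):
        group_low = [v for v in values if abs(v - low) <= abs(v - high)]
        group_high = [v for v in values if abs(v - low) > abs(v - high)]
        low, high = mean(group_low), mean(group_high)   # move centroids to group means
    return sorted(group_low), sorted(group_high)


if __name__ == "__main__":
    readings = [1.0, 1.2, 0.8, 5.0, 5.5, 4.8]
    labels = ["ok", "ok", "ok", "fault", "fault", "fault"]
    model = train_nearest_centroid(readings, labels)
    print(predict(model, 4.0))    # fault
    print(two_means(readings))    # ([0.8, 1.0, 1.2], [4.8, 5.0, 5.5])
```

The contrast mirrors the data point made above: the supervised version cannot be trained at all without the `labels` list, whereas the unsupervised version finds the same two groups but cannot name them.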

Large language models (LLMs)

Large language models are a type of deep learning algorithm trained on huge datasets and often used in natural language processing (NLP) applications to respond to queries in natural language. Examples include OpenAI's GPT series. LLMs can generate human-like text, power chatbots and support automated customer service. However, they can also inherit inaccuracies and biases from their training data and raise concerns about copyright and security.

The problem of "memorization" in LLMs, where a model reproduces text from its training data verbatim, poses considerable copyright and plagiarism risks for companies that use LLM-generated content. This calls for careful review processes and an understanding of where LLM outputs come from.
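One simple way to support such a review process is to measure how much of a generated text reappears word for word in a known source. The sketch below uses overlapping word sequences (n-grams) for this; the function names, the default of n = 5 and the idea of routing high scores to human review are assumptions for illustration, and real plagiarism checks compare against far larger reference collections.

```python
def word_ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """All sequences of n consecutive (lowercased) words in the text."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def verbatim_overlap(generated: str, reference: str, n: int = 5) -> float:
    """Share of the generated text's n-grams that also appear word for word in the reference."""
    generated_ngrams = word_ngrams(generated, n)
    if not generated_ngrams:          # text shorter than n words: nothing to compare
        return 0.0
    shared = generated_ngrams & word_ngrams(reference, n)
    return len(shared) / len(generated_ngrams)


if __name__ == "__main__":
    source = "the quick brown fox jumps over the lazy dog"
    print(verbatim_overlap("the quick brown fox jumps high", source))  # 0.5
```

A review workflow might, for example, send any text whose score exceeds a threshold chosen by the company to a human editor before publication.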

Foundation models

Foundation models are large AI models trained on broad data that can be adapted (fine-tuned) for a variety of downstream tasks. They are characterized by emergence (unexpected capabilities) and homogenization (a shared architecture). They differ from classic AI models in that they are initially not domain-specific, use self-supervised learning, enable transfer learning and are often multimodal (processing text, images and audio). LLMs are one type of foundation model. Their advantages include faster time to market and scalability; their challenges include transparency (the "black box" problem), data protection and high costs or infrastructure requirements.

The rise of foundation models signals a shift toward more versatile and adaptable AI. However, their "black box" nature and the considerable resources required to train or fine-tune them mean that access and control could become concentrated, potentially creating dependencies on a few large providers. This has strategic implications for make-or-buy decisions and the risk of vendor lock-in. The multimodal capability of many foundation models opens up entirely new categories of applications that can synthesize insights from different data types (e.g. analyzing text reports together with surveillance camera footage). This goes beyond what text-focused LLMs can do and calls for broader thinking about the data sources available.

The regulatory compass: navigating legal and ethical frameworks

The EU AI Act: core provisions and implications for companies

The EU AI Act, which entered into force on August 1, 2024, is the world's first comprehensive AI law and establishes a risk-based classification system for AI.

Risk categories:

- Unacceptable risk: AI systems that pose a clear threat to safety, livelihoods and rights are prohibited. Examples include social scoring, cognitive behavioral manipulation and the untargeted scraping of facial images. These prohibitions apply from February 2, 2025.

- High risk: AI systems that can adversely affect safety or fundamental rights. They are subject to strict requirements, including risk management systems, data governance, technical documentation, human oversight and conformity assessments before being placed on the market. Examples include AI in critical infrastructure, medical devices, employment and law enforcement. Most rules for high-risk AI apply from August 2, 2026.

- Limited risk: AI systems such as chatbots or systems that generate deepfakes must meet transparency obligations and inform users that they are interacting with AI or that content is AI-generated.

- Minimal risk: AI systems such as spam filters or AI-enabled video games. The Act allows their free use, although voluntary codes of conduct are encouraged.
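For a first internal inventory, the four tiers can be captured as a simple lookup. The sketch below is a deliberately simplified assumption: the names `RISK_TIERS`, `EXAMPLE_USE_CASES` and `obligations_for` are hypothetical, the mapping covers only the example use cases named in this section, and anything else is rejected rather than guessed. Actual classification depends on the Act's detailed provisions and annexes.

```python
from typing import Optional

# Tier -> (short summary of obligations, date from which they apply; None = no deadline)
RISK_TIERS = {
    "unacceptable": ("prohibited", "2025-02-02"),
    "high": (
        "risk management, data governance, technical documentation, "
        "human oversight, conformity assessment",
        "2026-08-02 (partly 2027-08-02)",
    ),
    "limited": ("transparency: disclose AI interaction or AI-generated content", "2026-08-02"),
    "minimal": ("no specific obligations; voluntary codes of conduct", None),
}

# Hypothetical mapping that only covers the examples named in the text
EXAMPLE_USE_CASES = {
    "social scoring": "unacceptable",
    "cognitive behavioral manipulation": "unacceptable",
    "critical infrastructure": "high",
    "medical devices": "high",
    "employment": "high",
    "law enforcement": "high",
    "chatbot": "limited",
    "deepfake generation": "limited",
    "spam filter": "minimal",
    "video game": "minimal",
}


def obligations_for(use_case: str) -> tuple[str, str, Optional[str]]:
    """Return (tier, obligation summary, applies-from date) for a known example use case."""
    tier = EXAMPLE_USE_CASES.get(use_case.strip().lower())
    if tier is None:
        raise KeyError(f"{use_case!r} is not in the example mapping; assess it against the Act")
    summary, applies_from = RISK_TIERS[tier]
    return tier, summary, applies_from


if __name__ == "__main__":
    for case in ["Chatbot", "employment", "spam filter"]:
        print(case, "->", obligations_for(case))
```

Raising an error for unknown use cases is a deliberate design choice: an inventory tool should force a proper assessment rather than silently default to "minimal risk".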

The Act sets out obligations for providers, importers, distributors and users (deployers) of AI systems, with providers of high-risk systems subject to the strictest requirements. Because of its extraterritorial scope, it also affects companies outside the EU if their AI systems are used on the EU market. Specific rules apply to general-purpose AI models (GPAI models), with additional obligations for those classified as posing "systemic risk". These rules generally apply from August 2, 2025. Non-compliance can lead to considerable fines: for prohibited practices, up to 35 million euros or 7% of global annual turnover, whichever is higher. From February 2025, Article 4 also requires providers and deployers of AI systems to ensure an adequate level of AI competence (AI literacy) among their staff.
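The fine ceiling for prohibited practices is simple arithmetic. For undertakings, the upper limit is 35 million euros or 7% of total worldwide annual turnover for the preceding financial year, whichever is higher; for SMEs and start-ups, the lower of the two amounts applies. The sketch computes only this ceiling (the function name is hypothetical); the fine actually imposed in a given case is set by the authorities within this limit.

```python
def max_fine_prohibited_practice(worldwide_turnover_eur: float, is_sme: bool = False) -> float:
    """Upper limit of the fine for prohibited AI practices (not the fine actually imposed)."""
    fixed_cap = 35_000_000.0
    turnover_cap = worldwide_turnover_eur * 7 / 100   # 7% of worldwide annual turnover
    # Larger undertakings: whichever is higher; SMEs and start-ups: whichever is lower
    return min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap)


if __name__ == "__main__":
    print(max_fine_prohibited_practice(1_000_000_000))             # 70000000.0
    print(max_fine_prohibited_practice(100_000_000))               # 35000000.0
    print(max_fine_prohibited_practice(100_000_000, is_sme=True))  # 7000000.0
```

The example shows why turnover matters: for a company with 1 billion euros in turnover, the percentage-based cap is already twice the fixed amount.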

The risk-based approach of the EU AI Act requires a fundamental change in how companies develop and use AI. It is no longer just about technical feasibility or business value; regulatory compliance and risk reduction must be built in from the start of the AI life cycle ("compliance by design"). The "AI competence obligation" is an important early requirement. It implies an immediate need for companies to assess and implement training programs, not only for technical teams but for everyone who develops, uses or oversees AI systems. This goes beyond basic awareness and includes understanding how the systems work and where their limits lie, as well as the ethical and legal framework. The law's focus on GPAI models, especially those with systemic risk, signals regulatory concern about the broad and potentially unforeseen effects of these powerful, versatile models. Companies that use or develop such models face heightened scrutiny and obligations, which affects their development roadmaps and go-to-market strategies.

Overview of the EU AI Act risk categories and key obligations

This table summarizes the core structure of the EU AI Act and helps managers quickly recognize which category their AI systems might fall into and understand the corresponding compliance burden and timelines.

| Risk category | Examples | Key obligations | Applies from |
| --- | --- | --- | --- |
| Unacceptable risk | Social scoring, cognitive behavioral manipulation, untargeted scraping of facial images | Prohibited | February 2025 |
| High risk | Critical infrastructure, medical devices, employment, law enforcement, education, migration management | Providers and deployers need a risk management system, data quality management and technical documentation, must ensure transparency and human oversight, and must meet requirements for robustness, accuracy and cybersecurity, including a conformity assessment | August 2026 (partly August 2027) |
| Limited risk | Chatbots, emotion recognition systems, biometric categorization systems, deepfakes | Transparency obligations, e.g. labeling AI systems and AI-generated content | August 2026 |
| Minimal risk | Spam filters, AI-enabled video games | No specific obligations; voluntary codes of conduct recommended | Can be used immediately |

The tension between innovation and accountability: finding the right balance

Companies must navigate the tension between promoting AI innovation and ensuring accountability, data protection (GDPR) and ethical use. The GDPR principles (lawfulness, fairness, transparency, purpose limitation, data minimization, accuracy, accountability) are fundamental to responsible AI and shape how AI systems are developed and used. Strategies for striking this balance include involving compliance and data protection teams early, conducting regular audits, drawing on external expertise and using specialized compliance tools. Some view regulatory guidelines not as a brake on innovation but as an accelerator that builds trust and increases acceptance of new technologies.
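Data minimization and purpose limitation can be applied in code before a record ever reaches an AI tool. The sketch below is an illustration under assumptions, not a compliance solution: the function name, the field names, the allowed-field set and the salted SHA-256 pseudonym are hypothetical choices. Pseudonymized data generally still counts as personal data under the GDPR, so the other principles continue to apply.

```python
import hashlib


def minimize_record(record: dict, allowed_fields: set[str], salt: bytes) -> dict:
    """Keep only the fields needed for the stated purpose and replace the
    direct identifier with a salted hash (pseudonymization, not anonymization)."""
    reduced = {key: value for key, value in record.items() if key in allowed_fields}
    if "customer_id" in record:  # hypothetical identifier field
        digest = hashlib.sha256(salt + str(record["customer_id"]).encode("utf-8"))
        reduced["customer_ref"] = digest.hexdigest()[:16]
    return reduced


if __name__ == "__main__":
    ticket = {
        "customer_id": 4711,
        "name": "Jane Doe",
        "email": "jane@example.com",
        "product": "router X",
        "complaint_text": "Connection drops every evening.",
    }
    # Purpose: summarize complaints per product, so name and email are not needed
    print(minimize_record(ticket, {"product", "complaint_text"}, salt=b"rotate-me"))
```

Keeping a stable pseudonym (`customer_ref`) lets the AI output be linked back to the customer inside the company without exposing the direct identifier to the tool.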

The "tension between innovation and accountability" is not a static compromise but a dynamic balance. Companies that proactively embed accountability and ethical considerations in their AI innovation cycle are more likely to build sustainable, trustworthy AI solutions. This ultimately fosters greater innovation by avoiding costly retrofits, reputational damage or regulatory penalties. The challenge of maintaining accountability is compounded by the growing complexity and potential "black box" nature of advanced AI models (as discussed under foundation models). This requires a stronger focus on explainability techniques (XAI) and robust audit mechanisms so that decisions made by AI can be understood, justified and, if necessary, contested.
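For simple models, explainability can be as direct as listing each input's contribution to a decision. The sketch assumes a linear scoring model with hypothetical feature names and weights; for such a model, weight times value is an exact breakdown of the score. Opaque models such as foundation models need approximate attribution methods instead, which this example does not implement.

```python
def explain_linear_score(
    weights: dict[str, float], bias: float, features: dict[str, float]
) -> tuple[float, list[tuple[str, float]]]:
    """Return the score and each feature's contribution, largest absolute effect first."""
    contributions = {name: weights[name] * features[name] for name in weights}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


if __name__ == "__main__":
    score, reasons = explain_linear_score(
        weights={"income_k": 0.5, "open_debts": -2.0, "years_as_customer": 0.25},
        bias=1.0,
        features={"income_k": 4.0, "open_debts": 1.0, "years_as_customer": 12.0},
    )
    print(score)  # 4.0
    for name, contribution in reasons:
        print(f"{name}: {contribution:+.2f}")
```

Storing this breakdown alongside each automated decision gives auditors, and affected people, something concrete to review or contest.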

AI strategies for managers: practical guidelines and examples

AI in action: applications, use cases and effective interaction

Recognize opportunities: AI applications and use cases across industries

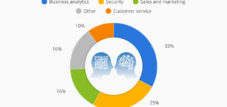

AI offers a wide range of applications, including content creation, personalized customer outreach, process optimization in production and logistics, predictive maintenance, and support in finance, human resources and IT.

Specific industry examples include:

- Automotive/manufacturing: AI and simulation in research (Arena2036), automated robot interaction (Festo), process optimization and predictive maintenance in production (Bosch).

- Financial services: greater security through analyzing large volumes of data for suspicious transactions, automated invoicing, investment analysis.

- Healthcare: faster diagnoses, broader access to care (e.g. interpreting medical images), optimization of pharmaceutical research.

- Telecommunications: network performance optimization, audiovisual enhancements, prevention of customer churn.

- Retail/e-commerce: personalized recommendations, chatbots for customer service, automated checkout processes.

- Marketing & sales: content creation (ChatGPT, Canva), optimized campaigns, customer segmentation, sales forecasting.

While many applications aim at automation and efficiency, an important emerging trend is AI's role in improving human decision-making and enabling new forms of innovation (e.g. drug discovery, product development). Managers should look beyond cost reductions to identify AI-driven growth and innovation opportunities. The most successful AI implementations often integrate AI into existing core processes and systems (e.g. SAP embedding AI in its enterprise software, Microsoft 365 Copilot) instead of treating AI as a standalone, isolated technology. This requires a holistic view of the enterprise architecture.

Master the dialogue: effective prompting for generative AI

Prompt engineering is an iterative, test-driven process for improving model output that requires clear goals and systematic testing. Effective prompts depend on both content (instructions, examples, context) and structure (order, labels, delimiters).

The key components of a prompt are: goal/mission, instructions, constraints (what to do and what not to do), tone/style, context/background data, few-shot examples, a request for reasoning (chain-of-thought) and the desired response format.
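The components listed above can be assembled into a prompt in a fixed, labeled order. The following sketch shows one possible layout, not a standard: the `build_prompt` function, the `###` section labels and the section order are assumptions chosen to illustrate both structure (order, labels, delimiters) and content (instructions, examples, context).

```python
from typing import Optional


def build_prompt(
    goal: str,
    instructions: list[str],
    constraints: list[str],
    tone: str,
    context: str,
    output_format: str,
    examples: Optional[list[tuple[str, str]]] = None,
    role: Optional[str] = None,
    ask_for_reasoning: bool = False,
) -> str:
    """Assemble prompt components in a fixed order, each under a labeled section."""
    sections = []
    if role:
        sections.append(f"You are {role}.")                        # role / persona
    sections.append(f"### Goal\n{goal}")                            # goal / mission
    sections.append("### Instructions\n" + "\n".join(f"- {i}" for i in instructions))
    sections.append("### Constraints\n" + "\n".join(f"- {c}" for c in constraints))
    sections.append(f"### Tone\n{tone}")                            # tone / style
    sections.append(f"### Context\n{context}")                      # background data
    if examples:                                                    # few-shot examples
        shots = "\n".join(f"Input: {i}\nOutput: {o}" for i, o in examples)
        sections.append(f"### Examples\n{shots}")
    if ask_for_reasoning:                                           # chain-of-thought request
        sections.append("Explain your reasoning step by step before giving the final answer.")
    sections.append(f"### Output format\n{output_format}")          # desired response format
    return "\n\n".join(sections)            # blank lines act as delimiters between sections


if __name__ == "__main__":
    print(build_prompt(
        goal="Assign each food item to its category.",
        instructions=["Answer with one word per item."],
        constraints=["Do not add explanations."],
        tone="Neutral.",
        context="Items: banana, spinach",
        output_format="One line per item: <item>: <category>",
        examples=[("Apple", "Fruit"), ("Carrot", "Vegetable")],
        role="a nutrition assistant",
    ))
```

Keeping the order fixed makes prompts easier to compare during iterative testing: when a result changes, it is clear which labeled section was edited.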

The best practices include:

- Set clear goals and use action verbs.

- Provide context and background information.

- Define the target audience precisely.

- Tell the AI what it should not do.

- Formulate prompts concisely, with precise word choice.

- Set output limits, especially for writing tasks.

- Assign a role (e.g. "you are a math tutor").

- Use prompt chaining (a sequence of linked prompts) to develop ideas step by step.
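Prompt chaining simply feeds each model response into the next prompt. In the sketch below, `model` stands for any function that sends a prompt to an LLM and returns its text; a stub is used so the example runs without an API, and both the `run_chain` name and the `{previous}` placeholder convention are assumptions.

```python
from typing import Callable


def run_chain(templates: list[str], model: Callable[[str], str], start: str) -> list[str]:
    """Run prompt templates in sequence; each template's {previous} slot receives the prior output."""
    outputs = []
    previous = start
    for template in templates:
        previous = model(template.format(previous=previous))
        outputs.append(previous)
    return outputs


if __name__ == "__main__":
    def echo_model(prompt: str) -> str:   # stand-in for a real LLM call
        return f"<{prompt}>"

    steps = [
        "List five product ideas for: {previous}",
        "Pick the most sustainable idea from: {previous}",
        "Write a one-sentence pitch for: {previous}",
    ]
    for output in run_chain(steps, echo_model, "reusable packaging"):
        print(output)
```

Because the earlier output is passed to `str.format` as an argument, braces inside it are harmless; literal braces in a template itself would have to be doubled.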

Effective prompting is less about finding a single "perfect prompt" than about developing a strategic approach to interacting with LLMs. This includes understanding the model's capabilities, iteratively refining prompts based on the output and using techniques such as role assignment and chain-of-thought to steer the AI toward the desired results. It is a skill that requires practice and critical thinking. The ability to provide relevant context and define constraints is essential for getting valuable results from GenAI. In other words, the quality of AI-generated content often depends directly on the quality and specificity of the human input, which underlines the lasting importance of human expertise in the process.

Best practices for creating effective AI prompts

This table offers practical advice that managers and specialists can apply immediately to improve their interactions with generative AI tools.

| Best practice | Example |
| --- | --- |
| Be specific and clear: define the goal precisely and use action verbs | "Create a bullet-point list that summarizes the most important results of the paper." |
| Provide context: supply background information and relevant data | "Based on the financial report, analyze profitability over the past five years." |
| Articulate the target audience and desired tone | "Write a product description for young adults who value sustainability." |
| Assign a specific role or persona | "You are a marketing expert. Design a campaign for ..." |
| Use few-shot examples to illustrate the desired output format | "Input: Apple. Output: Fruit. Input: Carrot. Output:" |
| Specify the response format | "Format your answer in Markdown." |
| Set constraints to sharpen the output | "Avoid technical jargon. The answer should be no longer than 200 words." |
| Iterate: adapt and refine the prompt based on previous results | |
| Use chain-of-thought: ask the AI to explain its reasoning step by step | "Explain your reasoning step by step." |

Tackle invisible AI: understand and manage shadow AI

Shadow AI refers to the unauthorized or unregulated use of AI tools by employees, often to increase productivity or to bypass slow official processes. It is a subcategory of shadow IT.

Risks of shadow AI:

- Data security & data protection: unauthorized tools can lead to data protection violations, disclosure of sensitive public-sector or company data and non-compliance with GDPR/HIPAA.

- Compliance & legal: violations of data protection laws, copyright issues, conflicts with freedom-of-information rules. The EU AI Act's "AI competence" requirement, in force since February 2025, makes the issue more urgent.

- Economic/operational: inefficient parallel structures, hidden costs from individual subscriptions, lack of license control, incompatibility with existing systems, disruption of workflows, reduced efficiency.

- Quality & control: lack of transparency in data processing, potential for biased or misleading results, erosion of public and internal trust.

- Undermining governance: circumventing IT governance makes it difficult to enforce security policies.

Strategies for managing shadow AI:

- Development of a clear AI strategy and establishment of a responsible AI guideline.

- Provision of official, approved AI tools as alternatives.

- Definition of clear guidelines for AI use, data processing and approved tools.

- Training and awareness-raising for employees on responsible AI use, risks and best practices.

- Regular audits to uncover unauthorized AI use and ensure compliance.

- Adoption of an incremental AI governance approach, starting with small steps and refining the guidelines over time.

- Promotion of cross-departmental cooperation and employee engagement.
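The regular audits mentioned above often start with network or proxy logs. The sketch assumes a hypothetical log format of one "user URL" pair per line and uses placeholder domains; in practice, the list of known AI services and the approved list would come from the company's own policy and tooling, and the function name `find_shadow_ai` is illustrative.

```python
from collections import Counter
from urllib.parse import urlparse

# Placeholder domains (hypothetical); real lists come from company policy and tooling
APPROVED_AI_HOSTS = {"approved-ai.example.com"}
KNOWN_AI_HOSTS = {"approved-ai.example.com", "chat.genai.example", "assistant.example.net"}


def find_shadow_ai(log_lines: list[str]) -> Counter:
    """Count requests per (user, host) to known AI services that are not approved.

    Assumes each log line has the hypothetical format: "<user> <url> ...".
    """
    hits: Counter = Counter()
    for line in log_lines:
        fields = line.split()
        if len(fields) < 2:          # skip blank or malformed lines
            continue
        user, url = fields[0], fields[1]
        host = urlparse(url).hostname
        if host in KNOWN_AI_HOSTS and host not in APPROVED_AI_HOSTS:
            hits[(user, host)] += 1
    return hits


if __name__ == "__main__":
    sample_log = [
        "alice https://chat.genai.example/c/1",
        "bob https://approved-ai.example.com/session",
        "alice https://chat.genai.example/c/2",
        "bob https://assistant.example.net/ask",
        "carol https://intranet.example.com/wiki",
    ]
    print(find_shadow_ai(sample_log).most_common())
```

In line with the incremental approach above, results like these are best used as a starting point for conversations about unmet needs and approved alternatives, not only for enforcement.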

Shadow AI is often a symptom of unmet user needs or overly bureaucratic technology adoption processes. A purely restrictive approach ("ban AI") can backfire. Effective management requires understanding the causes and providing practical, safe alternatives alongside clear governance. The rise of easily accessible GenAI tools (such as ChatGPT) has probably accelerated the spread of shadow AI, because employees can start using these tools quickly without involving IT. This makes proactive AI competence training (as required by the EU AI Act) and clear communication about approved tools even more important.

Risks of shadow AI and strategic responses

This table provides a structured overview of the diverse threats posed by unregulated AI use, together with concrete strategies that managers can implement.

| Risk area | Specific risks | Strategic responses |
| --- | --- | --- |
| Data security | Data leaks, unauthorized access to sensitive information, malware infections | AI usage policy, list of approved tools, encryption, strict access controls, employee training |
| Compliance | GDPR violations, breaches of industry regulations, copyright infringement | Regular audits, data protection impact assessments (DPIAs) for new tools, clearly defined data processing guidelines, legal advice where necessary |
| Financial | Uncontrolled spending on subscriptions, redundant licenses, inefficiencies | Centralized procurement, strict budget control, regular review of tool usage |
| Operational | Inconsistent results, incompatibility with existing company systems, process disruptions | Standardized tools, integration into existing workflows, continuous quality control |
| Reputational | Loss of customer trust due to data breaches or incorrect AI-generated communication | Transparent communication, adherence to ethics guidelines, a well-thought-out incident response plan |

How to transform leadership and collaboration and strengthen leadership soft skills: the human advantage in the AI age

How to transform leadership and collaboration and strengthen leadership soft skills: the human advantage in the AI age - Image: Xpert.digital

The human element: effects of AI on leadership, collaboration and creativity

Changing leadership in the age of AI: new requirements and competencies

AI requires leadership to shift toward uniquely human skills: awareness, compassion, wisdom, empathy, social understanding, transparent communication, critical thinking and adaptability. Managers need to develop technological competence in order to make well-founded decisions about AI tools and guide teams through change. This includes data literacy and the ability to critically assess AI-generated information.

The most important leadership tasks include fostering a culture of data-driven decision-making, effective change management, addressing ethical considerations through AI governance and promoting innovation and creativity. AI can relieve managers of routine tasks so that they can focus on strategic and human aspects such as motivation and employee development. A new role of "Chief Innovation and Transformation Officer" (CITO) may emerge, combining technical expertise, behavioral insight and strategic vision. Managers must navigate complex ethical landscapes, drive cultural transformation, manage collaboration between humans and AI, advance cross-functional integration and ensure responsible innovation.

The core challenge for managers in the AI age is not only to understand AI but also to lead people's response to it. This includes cultivating a learning culture, addressing fears of job loss and championing ethical AI use, which makes soft skills more important than ever. There is also a potential gap in how interpersonal relationships are perceived in the AI age: 82 % of employees consider them essential, compared with only 65 % of managers. This gap could lead to leadership strategies that underinvest in human connection and potentially harm morale and collaboration. Effective AI leadership involves a paradoxical skill set: embracing the data-driven objectivity of AI while strengthening subjective human judgment, intuition and ethical reasoning. The aim is to augment human intelligence rather than simply to create artificial intelligence.

Transformation of teamwork: The influence of AI on collaboration and team dynamics

AI can improve teamwork by automating routine tasks, enabling employees to concentrate on strategic and creative work. AI systems can support better decision-making by analyzing data and providing teams with insights. AI tools can promote better communication and coordination, enabling real-time collaboration and the sharing of information and resources. AI-based knowledge management can make centralized knowledge easier to access, enable intelligent search and encourage knowledge sharing. Combining human creativity, judgment and emotional intelligence with AI's data analysis and automation capabilities can lead to more efficient and better-founded work.

The challenges include ensuring data protection and ethical data handling in collaborative AI tools, the risk of "loss of competence" among employees if AI takes over too many tasks without a strategy for upskilling, and the concern that personal contact could become less frequent.

While AI can make collaboration more efficient (e.g. faster access to information, task automation), managers must actively work to maintain the quality of human interaction and team cohesion. This means designing work processes so that AI complements team members rather than isolating them, and creating opportunities for genuine human connection. Successfully integrating AI into teamwork depends heavily on trust: trust in the reliability and fairness of the technology, and trust among team members in how AI-derived insights are used. A lack of trust can lead to resistance and undermine collaborative efforts.

AI as a creative partner: expansion and redefinition of creativity in organizations

When introduced strategically and carefully, generative AI can create an environment in which human creativity and AI coexist and work together. AI can foster creativity by acting as a partner, offering new perspectives and pushing the boundaries of what is possible in areas such as media, art and music. AI can automate routine parts of creative processes, freeing people for more conceptual and innovative work. It can also help identify new trends or accelerate product development through AI-based experimentation.

Ethical dilemmas and challenges arise because AI-generated content calls into question traditional notions of authorship, originality, autonomy and intention. The use of copyrighted data to train AI models and the generation of potentially infringing content raise considerable concerns. In addition, there is a risk of excessive dependence on AI, which in the long term could stifle independent human creative exploration and skill development.

Integrating AI into creative processes is not just a question of new tools but a fundamental redefinition of creativity itself, toward a model of human-AI co-creation. This requires a change in mindset among creative professionals and their managers, who must embrace working with AI as a new modality. Because of the ethical issues surrounding AI-generated content (authorship, bias, deepfakes), organizations cannot simply adopt creative AI tools without robust ethical guidelines and oversight. Managers must ensure that AI is used responsibly to expand creativity, not for deception or infringement.

Creating order: implementing AI governance for a responsible transformation

The need for AI governance: why it matters for your company

AI governance ensures that AI systems are developed and used ethically, transparently and in accordance with human values and legal requirements.

Important reasons for AI governance are:

- Ethical considerations: addresses the potential for biased decisions and unfair outcomes and ensures fairness and respect for human rights.

- Legal and regulatory compliance: ensures compliance with emerging AI-specific laws (such as the EU AI Act) and existing data protection regulations (GDPR).

- Risk management: provides a framework for identifying, assessing and controlling AI-related risks, such as loss of customer trust, loss of competence or biased decision-making processes.

- Transparency and trust: promotes transparency and explainability in AI decisions and builds trust among employees, customers and stakeholders.

- Value maximization: ensures that AI use is aligned with business goals and that its benefits are effectively realized.

Without adequate governance, AI can cause unintended harm, ethical violations, legal penalties and reputational damage.

AI governance is not merely a compliance or risk-mitigation function but a strategic enabler. By setting clear rules, responsibilities and ethical guidelines, organizations can create an environment in which AI innovation can thrive responsibly, leading to more sustainable and trustworthy AI solutions. The need for AI governance grows in direct proportion to the increasing autonomy and complexity of AI systems. As organizations move from simple AI assistants to more sophisticated AI agents and foundation models, the scope and rigor of governance must evolve accordingly to meet new challenges in accountability, transparency and control.

Frameworks and best practices for effective AI governance

Governance approaches range from informal (based on corporate values) through ad hoc (reacting to specific problems) to formal (comprehensive frameworks).

Leading frameworks (examples):

- NIST AI Risk Management Framework (AI RMF): helps organizations manage AI-related risks through the functions Govern, Map, Measure and Manage.

- ISO/IEC 42001: specifies a comprehensive AI management system, including policies, risk management and continuous improvement.

- OECD AI Principles: promote the responsible use of AI, emphasizing human rights, fairness, transparency and accountability.

Best practices for implementation:

- Building internal governance structures (e.g. AI ethics councils, cross-functional working groups) with clear roles and responsibilities.

- Implementation of a risk-based classification system for AI applications.

- Ensuring robust data governance and management, including data quality, data protection and checks for bias.

- Implementation of compliance and conformity reviews based on relevant standards and regulations.

- Mandating human oversight, especially for high-risk systems and critical decisions.

- Involving stakeholders (employees, users, investors) through transparent communication.

- Development of clear ethical guidelines and their integration into the AI development cycle.

- Investment in training courses and change management to ensure the understanding and acceptance of governance guidelines.

- Starting with clearly defined use cases and pilot projects, then scaling gradually.

- Maintaining an inventory of the AI systems used in the company.

Effective AI governance is not a one-size-fits-all solution. Organizations must adapt frameworks such as the NIST AI RMF or ISO/IEC 42001 to their specific industry, size and risk appetite and to the types of AI they use. Adopting a framework purely on paper without practical adaptation is unlikely to be effective. The "human factor" in AI governance is just as crucial as the "process" and "technology" aspects. This includes clearly assigning accountability, providing comprehensive training and fostering a culture that values ethical and responsible AI use. Without employee buy-in and understanding, even the best-designed governance framework will fail.

Key components of an AI governance framework

This table offers a comprehensive checklist and guidance for managers who want to establish or improve their AI governance.

The key components of an AI governance framework are crucial for the responsible and effective use of AI:

- Core principles and ethical guidelines: should reflect corporate values and be oriented toward human rights, fairness and transparency.

- Roles and responsibilities: must be clearly defined, including an AI ethics council, data stewards and model reviewers; tasks, decision-making powers and accountability must be clearly specified.

- Risk management: identification, assessment and mitigation of risks, for example based on the risk categories of the EU AI Act; regular risk assessments and the development and monitoring of mitigation strategies play a central role.

- Data governance: covers quality, data protection, security and bias detection, including GDPR compliance and measures against discrimination.

- Model lifecycle management: standardized processes for development, validation, deployment, monitoring and decommissioning, with particular emphasis on documentation, versioning and continuous performance monitoring.

- Transparency and explainability: ensure that AI decisions are traceable and that AI use is disclosed.

- Legal compliance: requirements such as the EU AI Act and the GDPR must be met through ongoing reviews, process adjustments and cooperation with the legal department.

- Training and awareness: for developers, users and managers, promoting an understanding of AI fundamentals, ethical aspects and governance guidelines.

- Incident response and remediation: malfunctions, ethical violations or security incidents must be addressed effectively through established reporting channels, escalation processes and corrective measures that enable fast, targeted intervention.

Taking the lead: strategic imperatives for the AI transformation

Cultivating AI readiness: the role of continuous learning and upskilling

In addition to specialist knowledge, managers need a strategic understanding of AI in order to move their companies forward effectively. AI training for managers should cover AI fundamentals, successful case studies, data management, ethical considerations and the identification of AI potential within their own company. Since February 2, 2025, Article 4 of the EU AI Act has required providers and deployers of AI systems to ensure a sufficient level of "AI literacy" among staff involved in developing or using AI systems. This includes understanding AI technologies, application knowledge, critical thinking and the legal framework.

The benefits of AI training for managers include the ability to manage AI projects, develop sustainable AI strategies, optimize processes, secure competitive advantages and ensure ethical and responsible AI use. A lack of AI literacy and skills is a considerable obstacle to AI adoption. Various training formats are available: certificate courses, seminars, online courses and in-person training.

AI readiness means not only acquiring technical skills but also fostering a mindset of continuous learning and adaptability throughout the organization. Given how rapidly AI is developing, tool-specific training can quickly become outdated, so foundational AI knowledge and critical-thinking skills are more durable investments. The "AI literacy obligation" under the EU AI Act is a regulatory driver for upskilling, but organizations should see it as an opportunity rather than merely a compliance burden. A more AI-literate workforce is better equipped to identify innovative AI applications, use tools effectively and understand ethical implications, which leads to better AI outcomes overall. There is a clear link between a lack of AI skills and understanding and the spread of shadow AI. Investing in comprehensive AI education can directly reduce the risks of unauthorized AI use by enabling employees to make informed and responsible decisions.

Synthesizing opportunities and risks: a roadmap for confident AI leadership

Managing the AI transformation requires a holistic understanding of the technology's potential (innovation, efficiency, quality) and its inherent risks (ethical, legal, social).

Confident AI leadership means proactively shaping the organization's AI journey, including:

- Establishment of robust AI governance based on ethical principles and legal frameworks such as the EU AI Act.

- Promotion of a culture of continuous learning and AI competence at all levels.

- Strategic identification and prioritization of AI applications that deliver tangible value.

- Strengthening of human talent by focusing on AI that complements rather than replaces human skills, and management of the human impact of AI.

- Proactive management of challenges such as shadow AI.

The ultimate goal is to use AI as a strategic enabler of sustainable growth and competitive advantage while mitigating its potential downsides. True "confident AI leadership" goes beyond internal organizational management and includes a broader understanding of AI's societal impact and the company's role in this ecosystem. This means participating in policy discussions, helping to set ethical standards and ensuring that AI is used for the social good and not only for profit. The AI transformation journey is not linear and will involve navigating ambiguity and unexpected challenges. Managers must therefore cultivate organizational agility and resilience so that their teams can adapt to unforeseen technological advances, regulatory changes or AI-driven market disruptions.

Understanding and using the technology: AI fundamentals for decision-makers

The transformation driven by artificial intelligence is no longer a distant vision but a present reality that challenges companies of all sizes and industries while offering immense opportunities. For specialists and managers, this means playing an active role in shaping this change in order to harness the potential of AI responsibly and manage the associated risks confidently.

The basics of AI, from generative models and the distinction between assistants and agents to technological drivers such as machine learning and foundation models, form the foundation for a deeper understanding. This knowledge is essential for making well-founded decisions about the use and integration of AI systems.

The legal framework, in particular the EU AI Act, sets clear rules for the development and application of AI. The risk-based approach and the obligations that follow from it, especially for high-risk systems and with regard to the required AI literacy of employees, call for proactive engagement and the implementation of robust governance structures. The tension between the pursuit of innovation and the need for accountability must be resolved through an integrated strategy that treats compliance and ethics as an integral part of the innovation process.

The possible uses of AI are diverse and span industries. Identifying suitable use cases, mastering effective interaction techniques such as prompting, and handling shadow AI applications deliberately are key competencies for realizing the added value of AI in your own area of responsibility.

Last but not least, AI is fundamentally changing how people lead, collaborate and practice creativity. Managers must adapt their skills to focus more on human capabilities such as empathy, critical thinking and change management, and create a culture in which people and machines work together synergistically. Fostering collaboration and integrating AI as a creative partner require new ways of thinking and leading.

Establishing comprehensive AI governance is not an optional extra but a strategic necessity. It creates the framework for the ethical, transparent and safe use of AI, minimizes risks and builds trust among all stakeholders.

The AI transformation is a journey that requires continuous learning, adaptability and a clear vision. Specialists and managers who face these challenges and internalize the principles and practices outlined here are well equipped to shape the future of their organizations, departments and teams confidently in the age of artificial intelligence.