Published on: May 8, 2025 / Updated on: May 9, 2025 - Author: Konrad Wolfenstein

Tactile robotics, robots with a sense of touch: the new generation with Vulcan and MIT research on haptic object recognition - Image: Xpert.digital

MIT system for object recognition without special sensors and the Vulcan robot from Amazon

Haptic perception for machines: new standards in object recognition

In robotics, the development of tactile sensing and identification systems marks a decisive advance: for the first time, machines can not only see their surroundings but also “feel” them. Amazon's new Vulcan robot and MIT's innovative object recognition system exemplify this development. Both technologies significantly expand what robots can be used for and enable tasks that until now could only be handled by people with their natural haptic perception.

The Vulcan robot from Amazon: a breakthrough in tactile robotic handling

Functioning and technological foundations

The Vulcan robot developed by Amazon represents significant technological progress in the field of physical artificial intelligence. Amazon itself describes the development as a “breakthrough in robotics and physical AI”. The system consists of two main components: “Stow” for stowing objects and “Pick” for retrieving them. Its outstanding quality is the ability to perceive its surroundings by touch.

The technological basis for Vulcan's tactile skills is a set of special force-torque sensors that look like a hockey puck and let the robot "feel" how much force it can apply when gripping an object without damaging it. Aaron Parness, Director of Robotics AI at Amazon, emphasizes the uniqueness of this approach: “Vulcan is not our first robot that can move objects. But with its sense of touch, its ability to understand when and how it comes into contact with an object, it opens up new possibilities for optimizing work processes and facilities.”
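To make the idea of force-guided gripping concrete, here is a minimal sketch of a force-limited closing loop. It is not Amazon's implementation: the function names, the thresholds in newtons, the step size, and the simulated "soft object" are all assumptions chosen purely for illustration.

```python
def close_until_target_force(read_force_n, target_n=2.0, limit_n=5.0,
                             step_mm=0.5, max_travel_mm=80.0):
    """Close a (hypothetical) gripper in small steps until the measured
    contact force reaches target_n. Abort if the force exceeds limit_n."""
    travel_mm = 0.0
    while travel_mm <= max_travel_mm:
        force_n = read_force_n(travel_mm)  # stand-in for a force-torque sensor read
        if force_n > limit_n:
            raise RuntimeError("force limit exceeded, aborting grasp")
        if force_n >= target_n:
            return travel_mm, force_n      # firm enough, stop closing
        travel_mm += step_mm
    raise RuntimeError("no object contact within gripper travel")


def simulated_soft_object(travel_mm, contact_at_mm=30.0, stiffness_n_per_mm=0.8):
    """Assumed toy sensor model: zero force before contact, then a linear spring."""
    return max(0.0, (travel_mm - contact_at_mm) * stiffness_n_per_mm)


travel, force = close_until_target_force(simulated_soft_object)
print(f"stopped after {travel} mm at {force:.2f} N")
```

The loop stops as soon as the sensed force is high enough to hold the object, which is the basic benefit of force feedback over closing to a fixed position.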

To stow objects on shelves, Vulcan uses a tool that resembles a ruler attached to a hair straightener. With this “ruler”, it pushes other objects aside to make room for new items. The gripping arms adjust their grip width to the size and shape of the object, while integrated conveyor belts push the object into the container. To retrieve objects, Vulcan uses a suction gripper in combination with a camera system.

Current areas of application and performance

The Vulcan robot is currently being tested in two Amazon logistics centers: in Winsen near Hamburg (Germany) and in Spokane, Washington (USA). In Washington, six Stow Vulcan robots are active and have already stowed half a million items. In Winsen, two Pick Vulcans are at work and have already handled 50,000 orders.

The system's performance is remarkable: Vulcan can currently handle around 75 percent of the millions of products that Amazon offers. The smallest object the robot can manipulate is about the size of a lipstick or a USB stick. Particularly impressive is the robot's ability to identify objects in real time, since it is “impossible for it to memorize all the specifics of the items”, as Parness explains.

Future plans and integration into the logistics chain

Amazon plans to significantly increase the number of Vulcan robots in the next few years. This year, the number of Vulcans is to be increased to 60 in Winsen and to 50 in Washington. In the long term, the robots are to be used in logistics centers across Europe and the USA.

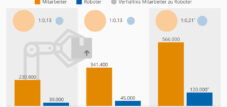

An important aspect of Amazon's strategy is the coexistence of people and machines. The company's “master plan” provides for people and machines working side by side. Above all, the robots are meant to handle items on shelves that people cannot reach without a ladder or for which they would have to bend down too far. This should raise overall efficiency while reducing the workload for human employees.

The MIT system for object recognition through handling: intelligent “feel” without special sensors

Innovative approach to object recognition

In parallel to Amazon's Vulcan, researchers from MIT, Amazon Robotics, and the University of British Columbia have developed a system that takes a different approach to giving robots haptic skills. This technology enables robots to recognize properties of an object such as weight, softness, or contents simply by picking it up and gently shaking it, much as people do with unfamiliar objects.

What makes this approach special is that no dedicated tactile sensors are required. Instead, the system uses the joint encoders already present in most robots: sensors that capture the rotational position and speed of the joints during movement. Peter Yichen Chen, an MIT postdoc and lead author of the research paper, explains the vision behind the project: “My dream would be to send robots out into the world so that they touch and move things and independently find out the properties of what they interact with.”

Technical functioning and simulation models

The core of the MIT system consists of two simulation models: one that simulates the robot and its movement, and one that reproduces the dynamics of the object. Chao Liu, another MIT postdoc, emphasizes the importance of these digital twins: "An exact digital replica of the real world is really important for the success of our method."

The system uses a technique called “differentiable simulation”, which enables the algorithm to predict how small changes in the properties of an object, such as mass or softness, influence the end position of the robot joints. As soon as the simulation matches the actual movements of the robot, the system has identified the correct properties of the object.
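The principle can be sketched with a toy example. The code below is not the MIT system: it assumes a single-joint arm with made-up inertia, length, damping, and torque values, differentiates a simple simulation with respect to the payload mass using forward-mode automatic differentiation (dual numbers), and then fits the mass with Gauss-Newton steps until the simulated joint angles match an "observed" trajectory.

```python
from dataclasses import dataclass


def _lift(x):
    return x if isinstance(x, Dual) else Dual(float(x))


@dataclass(frozen=True)
class Dual:
    """Value plus derivative with respect to the unknown mass."""
    val: float
    der: float = 0.0

    def __add__(self, o):
        o = _lift(o)
        return Dual(self.val + o.val, self.der + o.der)
    __radd__ = __add__

    def __sub__(self, o):
        o = _lift(o)
        return Dual(self.val - o.val, self.der - o.der)

    def __rsub__(self, o):
        return _lift(o) - self

    def __mul__(self, o):
        o = _lift(o)
        return Dual(self.val * o.val, self.der * o.val + self.val * o.der)
    __rmul__ = __mul__

    def __truediv__(self, o):
        o = _lift(o)
        return Dual(self.val / o.val,
                    (self.der * o.val - self.val * o.der) / (o.val * o.val))

    def __rtruediv__(self, o):
        return _lift(o) / self


def simulate(mass, torque=2.0, steps=200, dt=0.005):
    """Joint angles of an assumed one-joint arm holding a payload of `mass`.
    Works with plain floats and with Dual numbers alike."""
    arm_inertia, length, damping = 0.05, 0.4, 0.1  # illustrative constants
    inertia = arm_inertia + mass * length * length
    q, qd, angles = 0.0, 0.0, []
    for _ in range(steps):
        qdd = (torque - damping * qd) / inertia
        qd = qd + dt * qdd          # semi-implicit Euler step
        q = q + dt * qd
        angles.append(q)
    return angles


def estimate_mass(observed, guess=0.2, iterations=20):
    """Gauss-Newton fit of the mass using simulation derivatives."""
    m = guess
    for _ in range(iterations):
        sim = simulate(Dual(m, 1.0))  # seed d(mass)/d(mass) = 1
        num = sum((s.val - o) * s.der for s, o in zip(sim, observed))
        den = sum(s.der * s.der for s in sim)
        m -= num / den
    return m


observed = simulate(0.5)            # stands in for encoder readings
estimated = estimate_mass(observed)
print(f"estimated payload mass: {estimated:.4f} kg")
```

Only joint angles enter the fit, which mirrors the idea of relying on encoder data alone; a real system would have to cope with noise, several joints, and richer object models.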

A decisive advantage of this method is its efficiency: the algorithm can carry out the calculations within seconds and needs only one real-world motion trajectory of the robot to work. This makes the system particularly inexpensive and practical for real applications.

Application potential and advantages

The technology could be particularly useful in applications where cameras are less effective, such as sorting objects in a dark basement or clearing rubble in a partially collapsed building after an earthquake.

Because the algorithm does not need an extensive training data set, unlike some methods that rely on computer vision or external sensors, it is less susceptible to errors when confronted with unknown environments or new objects. This makes the system particularly robust and versatile.

The broader research landscape for tactile sensing in robotics

Basic challenges and current solutions

Developing robots with a sense of touch poses fundamental challenges for research. The human tactile system is extremely complex and nuanced, and artificial systems have to reproduce it with technological means. Ken Goldberg, a roboticist at the University of California, Berkeley, emphasizes the complexity of this task: “The human sense of touch is incredibly nuanced and complex, with an extensive dynamic range. While robots are making progress quickly, I would be surprised to see tactile sensors at a human level in the next five to ten years.”

Despite these challenges, research is making substantial progress. The Fraunhofer IFF, for example, develops tactile sensor systems that enable reactive grasping modeled on the human hand and are well suited to handling fragile or limp, flexible components. The sensor data are used for gripper adaptation, for component and position recognition, and for process monitoring.

Innovative research projects in the field of tactile robotics

In addition to the developments at Amazon and MIT, there are other important research projects in the field of tactile robot sensing:

The Max Planck Institute for Intelligent Systems has developed a haptic sensor called Insight, which perceives touch with high sensitivity. Georg Martius, research group leader at the institute, emphasizes the performance of the sensor: “Our sensor shows an excellent performance thanks to the innovative mechanical design of the shell, the tailor-made imaging system inside, automatic data acquisition and thanks to the latest deep learning methods.” The sensor is so sensitive that it can even feel its own orientation in relation to gravity.

Another interesting project is DensePhysNet, a system that actively performs a sequence of dynamic interactions (e.g. sliding and colliding) and uses a deep predictive model over its visual observations to learn dense, pixel-wise representations that reflect the physical properties of the observed objects. Experiments in both simulated and real environments show that the learned representations contain rich physical information and can be used directly to decode physical object properties such as friction and mass.

Suitable for:

- Amazon and AES with the AI robot Maximo for solar module installation - solar park in half the time & counteract the shortage of skilled workers

Future prospects for tactile robot systems

Integration of multimodal sensor systems

The future of tactile robotics lies in the integration of various sensory modalities. Researchers are already working on teaching artificial intelligence to combine senses such as sight and touch. By understanding how these different sensory modalities work together, robots can develop a more holistic understanding of their surroundings.

The MIT team is already planning to combine its method for object recognition with computer vision in order to create multimodal sensing that is even more capable. "This work does not try to replace computer vision. Both methods have their advantages and disadvantages. But here we have shown that we can already find out some of these properties without a camera," explains Chen.

Extended areas of application and future developments

The MIT researchers also want to explore applications with more complex robot systems such as soft robots, and with more complex objects, including sloshing liquids or granular media such as sand. In the long term, they hope to use this technology to improve robot learning so that future robots can quickly develop new manipulation skills and adapt to changes in their environment.

Amazon plans to further develop Vulcan technology in the coming years and to use it on a larger scale. The integration of Vulcan with the company's 750,000 mobile robots points to a comprehensive automation concept that could fundamentally change the logistics industry.

Tactile learning: When sensors give robots tact

The development of robots with a sense of touch, exemplified by Amazon's Vulcan and the MIT system for object recognition, marks a decisive turning point in robotics. These technologies enable robots to take on tasks that were previously reserved for people because they require sensitivity and tactile understanding.

The different approaches (Amazon's focus on specialized sensors and MIT's concept of using existing sensors for haptic inference) show the diversity of research directions in this area. Both approaches have their specific strengths and areas of application.

With the progressive integration of tactile skills into robot systems, new opportunities open up for automating complex tasks in logistics, production, healthcare, and many other areas. The ability of robots not only to see their surroundings but also to “feel” them brings us a significant step closer to a future in which robots and people can work together even more closely and intuitively.
