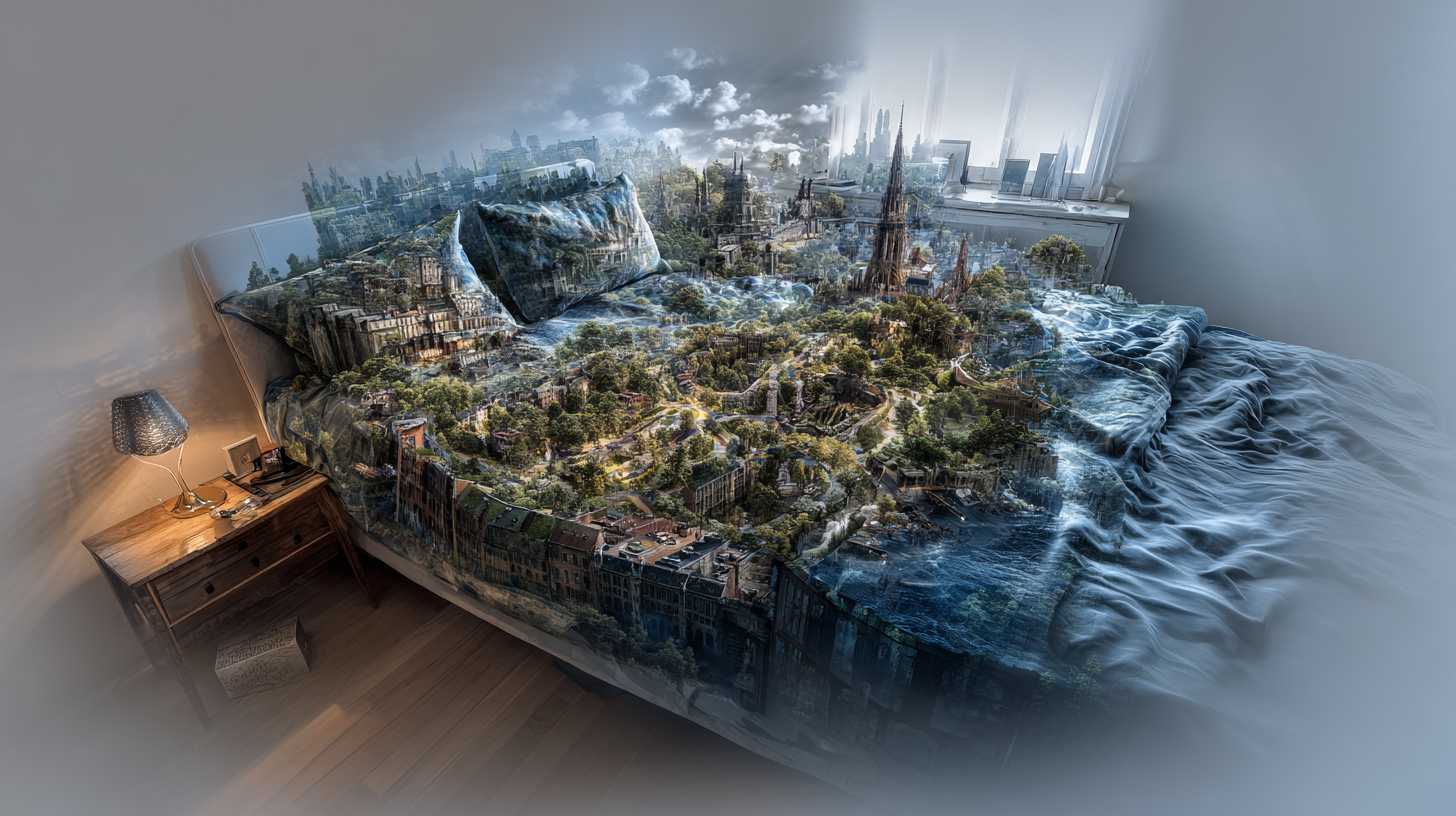

Google Genie 2 (DeepMind Genie 2) is a large "World Model" – creating interactive 3D worlds from images or text prompts – Image: Xpert.Digital

When will gamers experience their "Matrix" moment? Why DeepMind's Genie 2 is the next big leap after Gemini.

Not a product, but the future: What Google's interactive AI Genie 2 can really do – Genie 2 trains AI agents in simulated 3D worlds

Google Genie 2 (correctly: DeepMind Genie 2) is a large "World Model" that generates interactive 3D worlds from an image or text prompt, in which users or agents can act in real time via keyboard/mouse.

The current status (end of 2025): It is a research and demo system from Google DeepMind, not a freely available product, but is increasingly being shown at conferences and in the media as a building block for games, simulation and agent training.

What Genie 2 is technically

Genie 2 is a large-scale “Foundation World Model” that learned from video data to simulate physically consistent, interactive worlds (movement, collisions, NPC behavior, perspective changes).

Architecturally, it combines a video autoencoder with an autoregressive transformer that operates in latent space (similar to LLMs, but for video and world dynamics). At inference time, it is sampled frame by frame, conditioned on the user's actions (keyboard/mouse).

Current skills

From a single image (or an image previously generated by an image model such as Imagen), Genie 2 can generate a playable 3D scene, e.g. platforming or adventure-style environments.

The worlds remain consistent for approximately 10–60 seconds, including animations, lighting, water/particle effects, and the environment's reaction to player actions; then the scenario essentially "resets".

Uses and application areas

DeepMind positions Genie 2 primarily as a research and creative tool: rapid prototyping of interactive experiences, generation of diverse test environments for RL or agent systems (including SIMA agents).

Potential application areas include gaming, simulation/training, robotics (embodied agents) and general evaluation environments for general agents.

Availability and product status

Since its announcement on December 4, 2024, GENIE 2 has only been accessible to a select group of testers. A public release date has not yet been announced.

As of now, there is no public API or widespread product integration; Genie 2 is showcased in blog posts, papers, and demos (e.g., 60 Minutes, conferences, I/O), but remains an internal DeepMind system.

In Google's I/O 2025 coverage, Genie 2 appears alongside other generative media models like Veo and Gemini's agent capabilities, but without a separate developer release or pricing.

Google's AI model GENIE 2 creates a new reality: Fundamentals and technical basis of the model

Developed by Google DeepMind, GENIE 2 represents a significant breakthrough in the development of so-called world models. The fundamental function of this AI system is to generate three-dimensional, interactive environments from simple inputs such as a single image or a text description. Unlike conventional rendering engines or game engines, GENIE 2 is an autoregressive latent diffusion model that generates virtual worlds frame by frame, simulating the consequences of actions within those worlds.

Genie 2 was officially unveiled by Google DeepMind on December 4, 2024. The announcement, titled "Genie 2: A large-scale foundation world model," was published on the DeepMind blog at deepmind.google/blog.

GENIE 2 was presented as a research prototype within a limited Research Preview. This means that the model was not made directly available to the general public; access was initially granted only to selected researchers and creatives. Unlike its predecessor, GENIE 1, which was accompanied by a research paper, Google DeepMind did not publish a full scientific paper on GENIE 2.

The release of GENIE 2 coincided with a period of intensive AI development at Google. Just a few days later, on December 11, 2024, Google also announced the new Gemini 2.0 series, so the company presented several significant next-generation AI models before the end of 2024.

What is special about the technical architecture of GENIE 2?

The technical architecture of GENIE 2 consists of several components that work together. According to DeepMind, the model is trained on a large video dataset: an autoencoder compresses video frames into latent frames, and a large transformer dynamics model, trained with a causal mask similar to that used by large language models, predicts how these latents evolve. The model operates autoregressively, meaning it proceeds sequentially: during inference, the system takes a single action along with the preceding latent frames and then generates the next frame. Classifier-free guidance, a technique that needs no separate classifier, is used to improve controllability and responsiveness to actions. Because the model was trained on an enormous amount of video material, it demonstrates various emergent capabilities that were not explicitly programmed.
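The sampling loop described above can be illustrated with a minimal sketch. DeepMind has not published code for Genie 2, so everything here is an assumption made for illustration: `toy_dynamics` is a hypothetical deterministic stand-in for the diffusion-based transformer dynamics model, latents are short lists of floats, and `guidance_scale` and `context` are invented parameter names. Only the two ideas from the text are shown: one action plus past latent frames produce the next latent frame, and classifier-free guidance blends an action-conditioned prediction with an unconditional one.

```python
from typing import List, Optional, Sequence

Latent = List[float]


def toy_dynamics(history: Sequence[Latent], action: Optional[int]) -> Latent:
    """Hypothetical stand-in for the dynamics model (NOT Genie 2's network).

    Predicts the next latent from the most recent one. Passing
    action=None plays the role of the unconditional prediction.
    """
    last = history[-1]
    base = [0.9 * x for x in last]  # scene evolution without any action
    if action is None:
        return base
    # Conditional prediction: the action nudges the first latent dimension.
    return [base[0] + 0.1 * action] + base[1:]


def guided_step(history: Sequence[Latent], action: int, guidance_scale: float) -> Latent:
    """Classifier-free guidance: uncond + scale * (cond - uncond)."""
    cond = toy_dynamics(history, action)
    uncond = toy_dynamics(history, None)
    return [u + guidance_scale * (c - u) for c, u in zip(cond, uncond)]


def rollout(first_latent: Latent, actions: Sequence[int],
            guidance_scale: float = 2.0, context: int = 8) -> List[Latent]:
    """Autoregressive sampling: one action in, one new latent frame out."""
    frames = [list(first_latent)]
    for action in actions:
        history = frames[-context:]  # only recent latent frames are visible
        frames.append(guided_step(history, action, guidance_scale))
    return frames


if __name__ == "__main__":
    for frame in rollout([1.0, 0.5], actions=[1, 1, -1]):
        print(frame)
```

With `guidance_scale=1.0` the guided step reduces to the plain conditional prediction; larger values exaggerate the difference the action makes, which is the sense in which guidance can improve responsiveness to actions.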

How does GENIE 2 differ from its predecessor GENIE 1?

The difference between GENIE 1 and GENIE 2 is fundamental and marks a major leap forward in the development of world models. GENIE 1 was limited to two-dimensional environments and could only generate simple 2D platformer-like scenes. Characters were often blurry, and playability was limited to about two seconds. GENIE 2, on the other hand, works with three-dimensional worlds and can generate them consistently over significantly longer periods. While GENIE 1 produced highly simplified environments, GENIE 2 can render complex scenery with realistic object interactions, detailed character animations, and physically plausible behaviors. The ability to generalize has also been significantly improved, meaning that GENIE 2 can produce plausible worlds from starting images it has never seen in that exact form before.

What resolution and frame rate does GENIE 2 achieve?

GENIE 2 generates interactive environments at a resolution of 720p and a frame rate sufficient for interactive play. There are two versions of the model: an undistilled base version offering the highest possible quality, and a distilled version enabling real-time interaction, albeit with reduced visual quality. This balance between quality and speed is essential for practical applications.

Capabilities and functions

What physical simulations can GENIE 2 perform?

GENIE 2 boasts an impressive array of physical simulations that set it apart from previous generations of world models. The system can realistically depict gravity, meaning objects fall when dropped. It models collisions between objects and between characters and their environment. Water effects are realistically simulated, including waves created when objects break through or move through water. Smoke and other particle effects are also generated. Furthermore, the system incorporates complex lighting simulations, realistic reflections, and shadow effects. These physical simulations aren't simply pre-programmed animations; they are calculated in real time by the neural network based on the player's actions and the current state of the scene.

How does the so-called Long Horizon Memory of GENIE 2 work?

Long Horizon Memory is one of GENIE 2's most remarkable capabilities, solving a problem that plagued previous world models. The model can remember parts of the generated world that are currently outside the user's field of view. For example, if an avatar leaves a room and later returns to the same room, the system will consistently reconstruct the room exactly as it appeared before. This is possible because the model maintains an internal memory of world states. However, this memory does have its limits: GENIE 2 can maintain consistent worlds for approximately 60 seconds. After this time, visual artifacts can appear, details are lost, and the illusion of a stable environment breaks down. In practice, most demonstrations of the system use scenes lasting between 10 and 20 seconds to showcase the best results.

What perspectives and control options does GENIE 2 offer?

GENIE 2 supports several different perspectives, allowing the user to experience the virtual world from various viewpoints. The first-person perspective offers the view from the character's point of view. The third-person perspective provides an overview of the character and their surroundings from an external viewpoint, similar to many modern video games. An isometric perspective is also available, offering a diagonal, top-down view. Control is via keyboard and mouse, enabling intuitive operation. The system intelligently identifies which element in the scene represents the character and moves them accordingly, while other elements, such as trees or clouds, remain static.

Can GENIE 2 generate worlds from real photos?

Yes, GENIE 2 can indeed use real-world photos as a starting point and transform them into interactive, three-dimensional environments. This is one of the most fascinating aspects of the technology. A real-world photo of a beach can be animated, allowing the user to walk into the water and explore the surroundings. A photo of a room can become a fully interactive 3D environment. The system must derive the depth structure from the flat image and construct a consistent, physically plausible three-dimensional world. This requires a deep understanding of spatial geometry and object relationships.

How can GENIE 2 and the SIMA agent work together?

A particularly exciting combination is the integration of GENIE 2 with DeepMind's SIMA agent, an AI system capable of performing actions in digital worlds through natural language instructions. The SIMA agent can navigate the environments generated by GENIE 2 while following natural language commands. In demonstrations, for example, the SIMA agent can understand the instruction "open blue door" and execute it in the virtual world. This synergy is very promising: GENIE 2 creates an infinite number of different training environments, while SIMA learns and acts within them. This could lead to a new paradigm in the development of capable AI agents.
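The interplay between a generated world and an instruction-following agent can be sketched as a simple observe-act loop. This is a toy illustration under stated assumptions, not the interface of Genie 2 or SIMA: `ToyWorld`, `toy_agent`, and `run_episode` are hypothetical names, the "world" is a one-dimensional corridor with coloured doors, and the "agent" understands only instructions of the form "open <colour> door".

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class ToyWorld:
    """Hypothetical stand-in for a generated world: a corridor with doors."""
    doors: Dict[str, int]                      # door colour -> position
    agent_pos: int = 0
    opened: Set[str] = field(default_factory=set)

    def observe(self) -> dict:
        return {"agent_pos": self.agent_pos,
                "doors": dict(self.doors),
                "opened": set(self.opened)}

    def step(self, action: str) -> dict:
        """Apply one action and return the next observation."""
        if action == "right":
            self.agent_pos += 1
        elif action == "left":
            self.agent_pos -= 1
        elif action.startswith("open:"):
            colour = action.split(":", 1)[1]
            # A door only opens if the agent is standing in front of it.
            if self.doors.get(colour) == self.agent_pos:
                self.opened.add(colour)
        return self.observe()


def toy_agent(instruction: str, obs: dict) -> str:
    """Hypothetical policy for instructions like 'open blue door'."""
    colour = instruction.split()[1]
    target = obs["doors"][colour]
    if obs["agent_pos"] < target:
        return "right"
    if obs["agent_pos"] > target:
        return "left"
    return f"open:{colour}"


def run_episode(world: ToyWorld, instruction: str, max_steps: int = 20) -> bool:
    """Loop: observe, pick an action, step the world, check for success."""
    colour = instruction.split()[1]
    obs = world.observe()
    for _ in range(max_steps):
        if colour in obs["opened"]:
            return True
        obs = world.step(toy_agent(instruction, obs))
    return colour in obs["opened"]


if __name__ == "__main__":
    world = ToyWorld(doors={"blue": 3, "red": 1})
    print(run_episode(world, "open blue door"))
```

In a real setup the observation would be generated video frames and the policy a learned model; the loop structure of observing, acting, and stepping the world is the part this sketch is meant to show.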

Applications and practical uses

How can GENIE 2 revolutionize game development?

Game development is one of the most obvious applications for GENIE 2, and the impact could be transformative. Traditionally, game developers have to spend countless hours creating 3D models, designing landscapes, and manually programming environments. GENIE 2 could dramatically accelerate this process. Developers can input a concept drawing or a textual description, and the system will generate an instantly playable environment. This enables rapid prototyping and iterative development. Designers can quickly try out different environment variations to find out what works best. This not only saves time but can also foster creativity, as developers can test more concepts. Furthermore, GENIE 2-generated worlds could serve as a starting point for further refinement, with manual design still playing a role.

What is the significance of GENIE 2 for the training of AI agents?

Training AI agents is perhaps the most important application of GENIE 2 and the reason why Google DeepMind is focusing so much attention on this project. When training robots or other embodied AI systems, developers need millions of examples of different scenarios. Until now, these had to be collected in the real world, which is expensive and time-consuming, or limited simulated environments were used, which are not very realistic. GENIE 2 solves this problem by being able to generate an infinite number of different training scenarios. A robot could be trained in a warehouse generated by GENIE 2, in thousands of different configurations, to learn how to navigate chaotic environments. An autonomous vehicle could be trained in simulated big-city traffic, with endlessly varying scenarios. This leads to better generalization and more robust AI systems. Each generated scenario can be completely different while still remaining physically plausible and consistent.

How can GENIE 2 help with visualization and modeling?

Beyond game development and AI training, GENIE 2 also has applications in visualization and modeling. Architects could quickly transform their designs into interactive, three-dimensional models for clients to view. Businesses could visualize and optimize production processes. In education, complex concepts could be taught through interactive simulations. A biology teacher could visualize a microscopic ecosystem for students to navigate. A physics teacher could simulate physical phenomena in real time. The possibilities are virtually limitless.

What role could GENIE 2 play in medical training?

GENIE 2 could also make a significant contribution to medical education. Operational modeling in GENIE 2-generated hospital environments could help develop better systems to support physicians in their work. Medical students could train in realistic yet safe virtual environments. Various hospital configurations and emergency scenarios could be generated to improve preparation for different situations. This has the potential to significantly improve the quality of medical training without compromising the safety of real patients.

How can GENIE 2 be used in video production?

Another exciting area is the use of GENIE 2 in video production and cinematography. Filmmakers could generate input frames and then move virtual cameras through the generated worlds to create shots that would otherwise require expensive sets or elaborate CGI work. This could reduce film production costs and expand creative possibilities. A quick idea could be transformed into a finished video scene in minutes, without the need for a large production team.

World models instead of data scraping: This is how GENIE 2 creates millions of new AI training environments.

Unlimited training environments for AI

To what extent does GENIE 2 enable unlimited training environments?

The approach of unlimited training environments is transformative for AI research. Instead of AI systems repeatedly navigating the same environment and learning from limited training examples, GENIE 2 can generate millions of different environments. This means that an AI agent rarely, if ever, experiences the exact same situation twice. This diversity leads to better generalization because the agent cannot simply memorize behaviors for specific, known scenarios and instead has to develop transferable concepts and strategies. A robot trained in thousands of different warehouse configurations will be better able to handle a new, unknown configuration than a robot trained in a single environment.
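The idea of training on many distinct configurations and then testing on unseen ones can be sketched with a small scenario generator. The attribute lists and function names below are hypothetical and chosen only for illustration; in practice each description would serve as a prompt or seed image for a world model rather than as a plain string.

```python
import itertools
import random
from typing import List, Tuple

# Hypothetical scenario attributes for a warehouse setting.
LAYOUTS = ["narrow aisles", "open floor", "mezzanine"]
LIGHTING = ["bright", "dim", "flickering"]
CLUTTER = ["tidy shelves", "scattered boxes", "a blocked aisle"]


def all_prompts() -> List[str]:
    """Enumerate every distinct combination of the attributes above."""
    return [
        f"warehouse with {layout}, {light} lighting and {clutter}"
        for layout, light, clutter in itertools.product(LAYOUTS, LIGHTING, CLUTTER)
    ]


def split_prompts(train_size: int, seed: int = 0) -> Tuple[List[str], List[str]]:
    """Shuffle reproducibly, then split into training and held-out scenarios."""
    prompts = all_prompts()
    if not 0 <= train_size <= len(prompts):
        raise ValueError("train_size exceeds the number of distinct scenarios")
    rng = random.Random(seed)
    rng.shuffle(prompts)
    return prompts[:train_size], prompts[train_size:]


if __name__ == "__main__":
    train, held_out = split_prompts(train_size=20)
    print(len(train), "training scenarios,", len(held_out), "held out")
    print(held_out[0])
```

Keeping a held-out set that the agent never trains on is what makes it possible to measure generalization rather than memorization.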

Artificial general intelligence and world models

Why does DeepMind view world models like GENIE 2 as steps on the path to AGI?

DeepMind views world models like GENIE 2 as fundamental building blocks on the path to artificial general intelligence (AGI). The reason is that general intelligence requires an understanding of causality, physics, and consequences. A system capable of simulating complex, dynamic scenarios demonstrates a deeper grasp of the world than one that only recognizes static patterns. GENIE 2 enables AI systems to learn and operate in a wide variety of scenarios, which is a prerequisite for more general capabilities. Furthermore, the technology could ease the problem of data scarcity in training. Much of the readily available web text and video has already been used to train modern AI systems, so high-quality training data is becoming scarce. GENIE 2 could generate large amounts of synthetic training data without relying solely on real-world data, thus enabling the further development of AI systems.

Limitations and challenges

What are the time limits for GENIE 2?

Although GENIE 2 is impressive, it also has significant limitations. The most important is temporal consistency. The model can maintain consistent worlds for approximately 60 seconds. After this time, visual artifacts increasingly appear, disrupting the illusion of a coherent world. This is partly due to the model's design, which generates frames sequentially and can accumulate small errors in the process. These errors are known as drift and are a well-known problem with generative models. In practice, most demonstrations of the system are kept considerably shorter, typically 10 to 20 seconds, to showcase the best results.
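Why sequential generation drifts can be illustrated with a toy error model. This is a sketch under invented assumptions, not a measurement of Genie 2: the frame rate `fps`, the per-frame error, and the amplification factor `growth` are hypothetical values chosen only to show the shape of the effect, namely that each frame inherits the previous frame's error, slightly amplified, and adds a small new one.

```python
def accumulated_error(seconds: float, fps: int = 24,
                      per_frame_error: float = 0.001,
                      growth: float = 0.002) -> float:
    """Toy drift model for autoregressive generation.

    All parameter values are hypothetical. Each generated frame carries
    over the previous error (amplified by `growth`) and contributes a
    fresh error of size `per_frame_error`.
    """
    error = 0.0
    for _ in range(int(seconds * fps)):
        error = error * (1.0 + growth) + per_frame_error
    return error


if __name__ == "__main__":
    for seconds in (10, 20, 60):
        print(f"{seconds:>3} s -> accumulated error {accumulated_error(seconds):.3f}")
```

Under these assumptions the error grows faster than linearly with the length of the clip, which is consistent with demonstrations being kept short.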

What problems exist with visual consistency?

A second major problem is visual consistency over longer periods. The system's memory, which retains details of the world that are not visible, works relatively well for the first few seconds, but begins to deteriorate over time or if the camera moves too far. Text rendering is another weakness. If text is meant to be present in a scene, the model often struggles to generate it correctly and legibly. This is a known issue with many generative AI models.

What are the hardware and computing power requirements for GENIE 2?

GENIE 2 is computationally intensive. The system generates video frame by frame in response to user input, which requires an enormous amount of processing power. The distilled version, which enables real-time interaction, still demands considerable computing power. The undistilled base version, which offers the highest quality, requires even more resources. This makes widespread availability and local use currently unrealistic; running the system effectively is likely to require access to powerful data-center accelerators.

What limitations exist regarding agent interaction?

Although AI agents can move around and perform tasks in the worlds generated by GENIE 2, their interaction possibilities are still limited. Agents can navigate and carry out local interactions, such as opening doors or moving objects, but they cannot make permanent changes that fundamentally alter the world. The modeling of multiple independent agents acting simultaneously in the same world is also not yet well implemented.

Current availability and future prospects

Who has access to GENIE 2?

GENIE 2 is not currently available to the general public. The system is being tested by DeepMind as part of a limited research preview, with access granted to selected researchers and creatives. This is partly for practical reasons due to the computational requirements, but also to evaluate risks and further develop the model under controlled conditions. DeepMind plans to expand access to more testers in the future, but a timeframe for a public release has not been announced.

What are the next developments and improvements?

DeepMind is actively working to overcome the limitations of GENIE 2. One important improvement could be increasing the resolution to create even more realistic environments. Expanding interaction possibilities, allowing agents to manipulate the world more extensively, is also planned. Optimizing performance to achieve faster processing speeds and lower latency would improve the user experience. Of particular importance is extending temporal consistency, ensuring that worlds remain stable over longer periods. These improvements would enable a much wider range of applications.

What future versions or successors can be expected?

DeepMind has already presented a successor, GENIE 3 (announced in August 2025), which is reported to maintain consistent simulations for several minutes, a major step forward compared with GENIE 2. With further generations, such systems could eventually generate consistent worlds for hours, which is necessary for many training and application scenarios. The long-term path could lead to systems capable of creating virtually unlimited, consistent virtual worlds that can be explored interactively by AI or humans.

Impacts on industry and society

How could GENIE 2 change the game development industry?

The impact on the game development industry could be profound. Mid-sized and smaller studios that previously lacked the resources to create large open worlds could suddenly realize such projects. Development cycles could shorten drastically. This could lead to a democratization of game development, allowing more creative voices to be heard because the technical hurdles are lowered. At the same time, established studios could dramatically streamline their processes and dedicate more time to gameplay and narrative rather than asset creation.

What implications does GENIE 2 have for robotics?

The robotics industry could be transformed by GENIE 2. Robots could be trained faster and better, leading to more intelligent and capable systems. This could be particularly relevant in logistics and manufacturing, where autonomous systems already play a major role. GENIE 2 could accelerate and improve the development of such systems.

What ethical and social questions arise?

The power of GENIE 2 also raises ethical questions. The generation of convincing virtual worlds could be used for new forms of manipulation or deception. Access to this technology is currently limited to research institutions and well-funded companies, raising questions about inequality. There are also questions about the controllability of AI systems trained in these generated worlds and whether such systems might exhibit undesirable behavior outside of these controlled environments.

GENIE 2 from DeepMind: Why this world model could be the missing piece for AGI

From data scarcity to data abundance: How GENIE 2 creates infinite training worlds

Why is GENIE 2 a milestone in AI development?

GENIE 2 is a milestone because it addresses several problems in AI research. It shows that complex, dynamic virtual worlds can be generated interactively by a neural network, something that was long out of reach. It suggests that AI can develop at least an implicit understanding of physics, causality, and logical consequences. These are important building blocks on the path to artificial general intelligence. Furthermore, GENIE 2 could ease the data problem in AI research by synthetically generating virtually unlimited amounts of realistic training data. This could usher in a new era of AI development.

How will users and developers adapt to GENIE 2?

As GENIE 2 or its successors become more widely available, developers will need to adapt and integrate these new tools into their workflows. This could create new professions, such as that of the virtual world prompt engineer, who understands how to use GENIE effectively. It could also change existing professions, as some traditional tasks are taken over by AI. Society will have to adapt to a world where photorealistic environments can be generated in seconds.

What are the other challenges on the path to even better world models?

The next major challenges are to improve temporal consistency so that worlds remain stable for hours on end. Spatial accuracy needs to be increased to better recreate real-world locations. Interaction possibilities need to be expanded so that agents can influence the world more deeply. Computational requirements need to be reduced to make the system accessible to a wider user base. Text rendering needs to be improved to generate correctly legible text in scenes.

When will we see fully realized practical applications of GENIE 2?

Adoption is likely to be gradual. Research institutions are likely to be the first to use GENIE 2 for specific applications such as training AI agents. Internal prototyping in game development could begin in the next few years. However, it will probably be several more years before the system is optimized enough for large-scale industrial use. The next versions, especially GENIE 3 and beyond, will be crucial.

How does GENIE 2 position itself in the context of other AI advances?

GENIE 2 doesn't stand in isolation, but is part of a broader AI revolution. It arrives at a time when models like GPT-4, Claude, and other major language models are already demonstrating impressive capabilities. It arrives at a time when text-to-image generation is becoming commonplace with models like DALL-E and Midjourney. GENIE 2 extends these capabilities into the dimensions of temporality and interactivity. It shows that AI research can generate not only static content, but also dynamic, interactive environments. This could be the beginning of a new chapter in AI history.

What is the overarching goal of Google's DeepMind with GENIE 2?

The overarching goal is ambitious: DeepMind sees GENIE 2 as a stepping stone on the path to artificial general intelligence. By creating systems that can understand and simulate complex, dynamic worlds, DeepMind believes it is laying a fundamental building block for true intelligence. Combining this with agents like SIMA could lead to autonomous AI systems capable of operating in the real world. Whether this ambitious goal will be achieved will become clear in the coming years, but GENIE 2 is undoubtedly a significant step in that direction.
