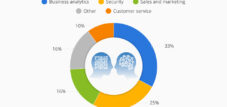

AI models in numbers: 15 large language models, 149 foundation models, 51 machine learning models. Image: Xpert.Digital

🌟🌐 Artificial Intelligence: Advances, Importance and Applications

🤖📈 Artificial intelligence (AI) has made significant progress in recent years and has had a notable impact on various industries and research areas. In particular, the development of large language models (LLMs) and foundation models has expanded the potential and range of applications of AI technologies. In this article we take a detailed look at the current developments in the field of AI models, their importance and their possible applications.

Note that the figures cited for the number and development of AI models may fluctuate, because research and technology in this area move very quickly. Despite possible deviations, the data provide solid guidance and a clear overview of the current status of AI models and of their growing potential and influence. They serve as a representative basis for understanding the key trends and developments in artificial intelligence.

✨🗣️ The Top 15 Large Language Models (LLMs)

Large language models (LLMs) are powerful AI models designed to process, understand and generate natural language. They are trained on massive data sets and use advanced machine learning techniques to give contextual, coherent answers to complex questions. This overview highlights 15 major language models that play a central role in various areas of AI technology.

Leading LLMs include the newly released o1, GPT-4, Gemini and Claude 3. These models have made notable advances in multimodal processing, meaning they can interpret and generate not only text but also other data formats such as audio and images. This multimodal capability opens up a variety of new applications, from image description and audio analysis to complex dialogue systems.

One particularly impressive model is Gemini Ultra, the first AI model reported to reach human-level performance on the Massive Multitask Language Understanding (MMLU) benchmark. MMLU tests a model's knowledge and reasoning with multiple-choice questions across 57 subjects, which makes it a useful indicator for practical applications such as chatbots, translation systems and automated customer support solutions.
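Benchmarks such as MMLU are typically reported as accuracy on multiple-choice questions grouped by subject. The following is only a minimal sketch of that kind of scoring: the sample rows, the subject names and the helper `accuracy_by_subject` are hypothetical and are not taken from the benchmark or from any model's published results.

```python
from collections import defaultdict

# Hypothetical graded answers: (subject, model_choice, correct_choice).
# These rows are invented for illustration; they are not real benchmark items.
results = [
    ("astronomy", "B", "B"),
    ("astronomy", "C", "A"),
    ("law", "D", "D"),
    ("law", "A", "A"),
]


def accuracy_by_subject(rows):
    """Return the fraction of correctly answered questions per subject."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for subject, predicted, expected in rows:
        totals[subject] += 1
        if predicted == expected:
            correct[subject] += 1
    return {subject: correct[subject] / totals[subject] for subject in totals}


per_subject = accuracy_by_subject(results)
# Overall accuracy: correct answers divided by all questions.
overall = sum(predicted == expected for _, predicted, expected in results) / len(results)

print(per_subject)
print(overall)
```

A single headline score hides differences between subjects, which is why per-subject accuracy is computed alongside the overall value here.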

Several dozen other well-known language models exist, but there is no definitive overall count. The number keeps growing as companies and research institutions develop new models and improve existing ones.

Here is the current overview of the 15 most important language models:

- o1

- GPT-4

- GPT-3.5

- Claude

- Bloom

- Cohere

- Falcon

- LLaMA

- LaMDA

- Luminous

- Orca

- Vicuna 33B

- PaLM

- Dolly 2.0

- Guanaco-65B

🌍🛠️ Foundation Models: The foundation of modern AI

In addition to large language models, so-called foundation models play a crucial role in the further development of AI. Foundation models, which include GPT-4, Claude 3 and Gemini, are extremely large AI systems trained on massive, often multimodal data sets. Their main advantage is that they can be applied to many different tasks without a new model having to be developed each time. This flexibility and scalability make foundation models an indispensable tool for a variety of applications in industry, science and technology.

A total of 149 foundation models were released worldwide in 2023, more than double the number released in 2022. This shows the rapid growth and increasing relevance of these models. Notably, around 65.7% of them are open source, which encourages research and development in this area. Open source models enable developers and researchers around the world to build on existing models and adapt them for their own purposes, which contributes significantly to accelerating innovation in the field of AI.
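To make the percentage concrete, the quoted share can be converted into an approximate model count. This is only a back-of-the-envelope sketch using the two figures from the text; the variable names are illustrative, and the result is rounded because the 65.7% figure is itself rounded.

```python
# Figures quoted in the text for foundation models released in 2023.
TOTAL_FOUNDATION_MODELS = 149
OPEN_SOURCE_SHARE = 0.657  # 65.7%

# Approximate counts; rounding is needed because the share is a rounded value.
open_source_count = round(TOTAL_FOUNDATION_MODELS * OPEN_SOURCE_SHARE)
other_count = TOTAL_FOUNDATION_MODELS - open_source_count

print(f"Open source: about {open_source_count} of {TOTAL_FOUNDATION_MODELS} models")
print(f"Not open source: about {other_count} of {TOTAL_FOUNDATION_MODELS} models")
```

In other words, roughly two out of every three foundation models released in 2023 were open source.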

One reason foundation models are becoming more common is their ability to efficiently handle massive data sets and automate tasks that previously had to be done manually. For example, they are used in medicine to analyze large amounts of patient data and support diagnoses. In the financial industry they help with fraud detection and risk assessment, while in the automotive industry they help improve autonomous driving technologies.

🚀📈 Machine learning models: The engine of AI development

In addition to foundation models, specialized machine learning models also play an important role in the modern AI landscape. These models are designed to solve specific problems and are often developed in close collaboration between academia and industry. According to the AI Index from the Stanford Institute for Human-Centered Artificial Intelligence (HAI), 87 machine learning models were released in 2023. Of these, 51 were developed by industry and 15 came from academic research. A further 21 were created through collaborations between academia and industry.
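The breakdown can be checked with simple arithmetic. The sketch below uses only the three counts quoted above and derives the total and each group's share; the dictionary keys are illustrative labels, not official category names.

```python
# Counts quoted in the text for machine learning models released in 2023.
released_by = {
    "industry": 51,
    "academia": 15,
    "industry-academia collaboration": 21,
}

# The three groups should add up to the total mentioned in the text.
total = sum(released_by.values())

# Share of each group in percent, rounded to one decimal place.
shares = {origin: round(100 * count / total, 1) for origin, count in released_by.items()}

print(f"Total: {total}")
for origin, share in shares.items():
    print(f"{origin}: {released_by[origin]} models ({share}%)")
```

Industry alone accounts for well over half of the releases, and collaborations outnumber purely academic models.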

This trend shows that the boundaries between academic research and industrial application are becoming increasingly blurred. Collaborations between science and companies lead to accelerated development of AI solutions that can be quickly put into practice. An example of this is the development of machine learning algorithms to optimize production processes in the manufacturing industry or to improve recommendation systems in the e-commerce industry.

Machine learning models are also crucial in research. They make it possible to recognize complex patterns in large amounts of data and to make predictions that would hardly be possible with traditional methods. One example is genomic research, where machine learning models are used to identify genetic abnormalities and develop new treatments for rare diseases.

🌐🔀 Multimodality: The future of AI

An important trend in AI development is the increasing multimodality of models. Multimodal AI models are able to simultaneously process and combine different types of data – such as text, images, audio and even videos. This capability is a critical step toward more comprehensive and versatile AI.

One application of multimodal models is automatic image description. The model analyzes the image and produces a coherent natural-language description of what it shows. Such models are used in areas such as accessibility, where they can help visually impaired people better understand visual information. Multimodal AI models could also be used in the entertainment industry to create interactive films and games that respond to users' actions and inputs.

Another field that could benefit from multimodal AI models is medical diagnostics. The simultaneous analysis of image data (e.g., X-rays), text data (e.g., patient records), and audio data (e.g., doctor's consultations) could significantly improve diagnostic accuracy.

🛠️⚖️ Challenges and ethical aspects

Despite the impressive progress, the development and deployment of AI models also pose challenges. One of the biggest is bias: AI models trained on insufficiently diverse data sets can reinforce bias and discrimination. This can be particularly problematic when AI is used in sensitive areas such as criminal justice or personnel recruitment.

Another aspect is the traceability and explainability of AI models. While simple machine learning models are often relatively easy to understand, complex models such as LLMs and foundation models are increasingly becoming “black boxes”. This means that it is often difficult for users to understand why the model made a certain decision. This is particularly problematic in safety-critical applications, for example in medicine or finance.

There is also the question of data security. Foundation models require massive amounts of training data, which often include personal or sensitive information. The storage and processing of this data must therefore be designed to be particularly secure in order to prevent misuse and data leaks.

🎯🧠 Potential in artificial intelligence

The rapid development of AI models, especially large language models and foundation models, impressively shows the potential that artificial intelligence has. These models have fundamentally changed the way we interact with technology and open up numerous new applications across various industries. The increasing multimodality of AI systems will play an even greater role in the coming years and enable new, innovative applications.

At the same time, however, the ethical challenges and risks associated with the use of these technologies must also be taken seriously. It is important that the development and implementation of AI systems always keep people at the center and that these technologies are used responsibly and transparently.

The future of artificial intelligence remains exciting, and it is clear that we are only at the beginning of a comprehensive transformation. AI will continue to advance at a rapid pace and play an increasingly large role in our everyday lives and our world of work.

Xpert.Digital - Konrad Wolfenstein
